Google’s Secure AI Framework (SAIF)

The potential of AI, especially generative AI, is immense. As innovation moves forward, the industry needs security standards for building and deploying AI responsibly. That’s why we introduced the Secure AI Framework (SAIF), a conceptual framework to secure AI systems.

Six core elements of SAIF

-

Expand strong security foundations to the AI ecosystem

-

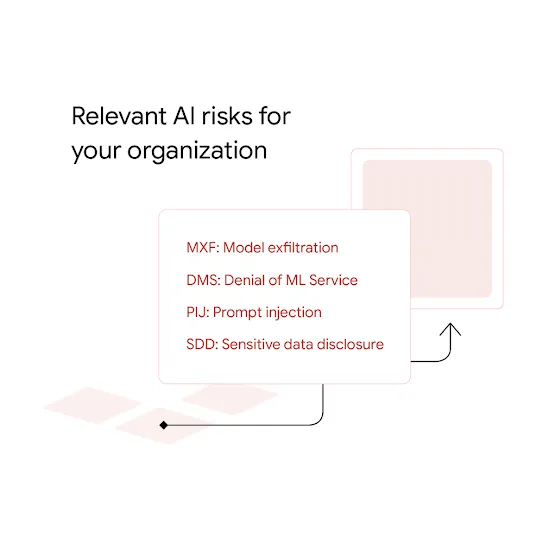

Extend detection and response to bring AI into an organization’s threat universe

-

Automate defenses to keep pace with existing and new threats

-

Harmonize platform-level controls to ensure consistent security across the organization

-

Adapt controls to adjust mitigations and create faster feedback loops for AI deployment

-

Contextualize AI system risks in surrounding business processes

Enabling a safer ecosystem

Additional resources

Common questions about SAIF

Google has a long history of driving responsible AI and cybersecurity development, and we have been mapping security best practices to AI innovation for many years. Our Secure AI Framework is distilled from the body of experience and best practices we’ve developed and implemented, and reflects Google’s approach to building ML and generative AI-powered apps with responsive, sustainable, and scalable protections for security and privacy. We will continue to evolve and build SAIF to address new risks, changing landscapes, and advancements in AI.

See our quick guide to implementing SAIF:

- Step 1 - Understand the use

- Understanding the specific business problem AI will solve and the data needed to train the model will help drive the policy, protocols, and controls that need to be implemented as part of SAIF.

- Step 2 - Assemble the team

- Developing and deploying AI systems, just like traditional systems, are multidisciplinary efforts.

- AI systems are often complex and opaque, include large numbers of moving parts, rely on large amounts of data, are resource intensive, can be used to apply judgment-based decisions, and can generate novel content that may be offensive or harmful, or that can perpetuate stereotypes and social biases.

- Establish the right cross-functional team to ensure that security, privacy, risk, and compliance considerations are included from the start.

- Step 3 - Level set with an AI primer

- As teams begin evaluating the business use of AI, along with the various and evolving complexities, risks, and security controls that apply, it is critical that all parties involved understand the basics of the AI model development life cycle and the design and logic of the model methodologies, including their capabilities, merits, and limitations.

- Step 4 - Apply the six core elements of SAIF

- These elements are not intended to be applied in chronological order.

Stay tuned! Google will continue to build and share Secure AI Framework resources, guidance, and tools, along with other best practices in AI application development.

Why we support a secure AI community for everyone